The rapid and accurate analysis of ion concentrations in mixed salt solutions is critical to the utilization of salt lake chemical resources. To explore an efficient, non-destructive detection method, this study proposes a deep learning model that combines a Convolutional Neural Network (CNN), a Long Short-Term Memory (LSTM) network, and an Attention mechanism to predict salt solution concentrations from Near-Infrared Spectroscopy (NIRS) data. First, single-component and two-component mixed salt solution samples of NaCl, KCl, and MgCl2 were prepared, and their near-infrared spectra were collected. The spectra were preprocessed with Savitzky-Golay smoothing and derivatives, and a CNN-LSTM-Attention prediction model was then constructed. The model was compared against common baselines, namely Partial Least Squares Regression (PLSR), Support Vector Regression (SVR), and Random Forest (RF), and an ablation study was performed to analyze the contribution of each deep learning module. The results show that for single-component salt solutions, the proposed model performs comparably to the strong SVR and RF baselines. In mixed solutions with severe spectral overlap, the CNN-LSTM-Attention model achieved a higher coefficient of determination (R2) than all three baselines on the NaCl + KCl and KCl + MgCl2 datasets (0.925 and 0.973, respectively) and came close to the best baseline on MgCl2 + NaCl (0.969 versus 0.975 for RF). The study concludes that the proposed CNN-LSTM-Attention model can effectively address the challenge of spectral overlap, demonstrating the potential of deep learning for the quantitative analysis of complex mixture systems via near-infrared spectroscopy.
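The Savitzky-Golay preprocessing mentioned in the abstract can be illustrated with a minimal pure-Python sketch. It assumes a 5-point window and a quadratic polynomial, for which the convolution coefficients are well known. The window length, polynomial order, and derivative order actually used in the study are not stated here, and `sg_apply` and the sample absorbance values are hypothetical.

```python
# Savitzky-Golay smoothing and first derivative, 5-point quadratic window.
# ASSUMPTION: window length and polynomial order are illustrative only;
# the source does not state the settings used in the study.

SMOOTH_5 = [-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35]  # smoothing coefficients
DERIV_5 = [-2 / 10, -1 / 10, 0.0, 1 / 10, 2 / 10]         # first-derivative coefficients


def sg_apply(spectrum, coeffs, step=1.0, derivative_order=0):
    """Slide the coefficient window over the spectrum; edge points are dropped."""
    half = len(coeffs) // 2
    out = []
    for i in range(half, len(spectrum) - half):
        window = spectrum[i - half:i + half + 1]
        value = sum(c * x for c, x in zip(coeffs, window))
        # Derivatives are scaled by the sampling step raised to the derivative order.
        out.append(value / (step ** derivative_order))
    return out


# Hypothetical absorbance values on an evenly spaced wavelength grid.
absorbance = [0.10, 0.12, 0.15, 0.19, 0.24, 0.30, 0.37]
smoothed = sg_apply(absorbance, SMOOTH_5)
first_derivative = sg_apply(absorbance, DERIV_5, step=1.0, derivative_order=1)
```

Because the sample values lie on an exact quadratic, the smoothed output reproduces the interior points, which is a quick sanity check on the coefficients. In practice a library routine with configurable window and order would likely be preferable.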

| Published in | International Journal of Energy and Environmental Science (Volume 10, Issue 5) |
| DOI | 10.11648/j.ijees.20251005.12 |
| Page(s) | 120-128 |
| Creative Commons | This is an Open Access article, distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution and reproduction in any medium or format, provided the original work is properly cited. |
| Copyright | Copyright © The Author(s), 2025. Published by Science Publishing Group |

Keywords: Near-Infrared Spectroscopy, Ion Concentration Prediction, Convolutional Neural Network, Long Short-Term Memory Network, Attention Mechanism, Spectral Analysis

| Type of salt solution | Concentration (mol/L) | Number of samples |
|---|---|---|
| NaCl | 0.2-5 | 200 |
| KCl | 0.1-4 | 242 |
| MgCl2 | 0.1-4 | 259 |
| NaCl + KCl | 0.1-4 | 284 |
| KCl + MgCl2 | 1-4 | 218 |
| MgCl2 + NaCl | 0.1-3.6 | 227 |

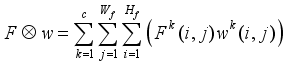

(1)

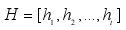

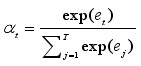

(1)  ) output by the LSTM layer is input into a fully connected layer to calculate an alignment score et for each hidden state ht. This score measures the importance of each feature. Next, the softmax function is used to normalize the alignment scores et to obtain attention weights αt. The sum of all weights is 1, forming a probability distribution. Finally, the obtained attention weights αt are used for a weighted sum with the corresponding LSTM hidden states ht to generate a context vector c that contains globally important information. This vector is then fed into subsequent fully connected layers for the final concentration prediction. The relevant formulas are as follows

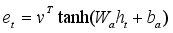

) output by the LSTM layer is input into a fully connected layer to calculate an alignment score et for each hidden state ht. This score measures the importance of each feature. Next, the softmax function is used to normalize the alignment scores et to obtain attention weights αt. The sum of all weights is 1, forming a probability distribution. Finally, the obtained attention weights αt are used for a weighted sum with the corresponding LSTM hidden states ht to generate a context vector c that contains globally important information. This vector is then fed into subsequent fully connected layers for the final concentration prediction. The relevant formulas are as follows  (2)

(2)  (3)

(3)  (4)

(4)  (5)
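The three attention steps described above (scoring, softmax normalization, weighted sum) can be sketched in plain Python. This is only an illustration: the scoring layer is assumed to be a single dense unit with a tanh activation, and the names `attention_pool`, `weights`, and `bias` as well as the example hidden states are hypothetical rather than taken from the study.

```python
import math


def attention_pool(hidden_states, weights, bias):
    """Score each hidden state, softmax the scores, return (alphas, context)."""
    # Alignment score e_t from one dense unit.
    # ASSUMPTION: tanh activation; the source only says "fully connected layer".
    scores = [math.tanh(sum(w * h for w, h in zip(weights, h_t)) + bias)
              for h_t in hidden_states]
    # Softmax over time steps, subtracting the max for numerical stability.
    peak = max(scores)
    exps = [math.exp(e - peak) for e in scores]
    total = sum(exps)
    alphas = [x / total for x in exps]
    # Context vector: weighted sum of hidden states, one value per hidden unit.
    dim = len(hidden_states[0])
    context = [sum(a * h_t[d] for a, h_t in zip(alphas, hidden_states))
               for d in range(dim)]
    return alphas, context


# Hypothetical LSTM outputs: 3 time steps, hidden size 2.
h = [[0.1, 0.4], [0.5, -0.2], [0.9, 0.3]]
alphas, c = attention_pool(h, weights=[1.0, 0.5], bias=0.0)
```

The weights always sum to 1, so each component of the context vector stays within the range spanned by the hidden states. In a trained model, `weights` and `bias` would be learned jointly with the CNN and LSTM layers.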

(5)

(6)

(6) NaCl | KCl | MgCl2 | NaCl+ KCl | KCl+ MgCl2 | MgCl2+NaCl | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

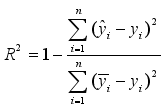

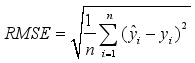

R2 | RMSE | R2 | RMSE | R2 | RMSE | R2 | RMSE | R2 | RMSE | R2 | RMSE | |

PLSR | 0.967 | 0.280 | 0.958 | 0.238 | 0.978 | 0.164 | 0.738 | 0.607 | 0.939 | 0.221 | 0.965 | 0.185 |

SVR | 0.971 | 0.230 | 0.967 | 0.177 | 0.986 | 0.121 | 0.777 | 0.669 | 0.968 | 0.143 | 0.959 | 0.194 |

RF | 0.964 | 0.253 | 0.967 | 0.181 | 0.984 | 0.131 | 0.825 | 0.444 | 0.953 | 0.174 | 0.975 | 0.153 |

CNN+LSTM+Attention | 0.968 | 0.213 | 0.972 | 0.168 | 0.984 | 0.147 | 0.925 | 0.340 | 0.973 | 0.146 | 0.969 | 0.180 |
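For readers who want to reproduce the two metrics reported in the table, the sketch below computes R2 and RMSE from their standard definitions. It assumes the study uses these textbook forms; the function names and the example concentrations are hypothetical.

```python
import math


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Root mean square error, in the same unit as the targets (mol/L here)."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


# Hypothetical reference and predicted concentrations in mol/L.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
r2 = r2_score(y_true, y_pred)
error = rmse(y_true, y_pred)
```

R2 is unitless and depends on the spread of the reference values, whereas RMSE is expressed in mol/L, so the two can rank models differently on the same dataset.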

| Model | NaCl R2 | NaCl RMSE | KCl R2 | KCl RMSE | MgCl2 R2 | MgCl2 RMSE | NaCl+KCl R2 | NaCl+KCl RMSE | KCl+MgCl2 R2 | KCl+MgCl2 RMSE | MgCl2+NaCl R2 | MgCl2+NaCl RMSE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN | 0.901 | 0.423 | 0.916 | 0.288 | 0.893 | 0.337 | 0.494 | 0.818 | 0.513 | 0.588 | 0.803 | 0.427 |
| CNN+LSTM | 0.943 | 0.321 | 0.947 | 0.230 | 0.937 | 0.259 | 0.835 | 0.451 | 0.921 | 0.225 | 0.883 | 0.329 |
| CNN+LSTM+Attention | 0.968 | 0.208 | 0.972 | 0.168 | 0.984 | 0.147 | 0.925 | 0.340 | 0.973 | 0.146 | 0.969 | 0.180 |
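The contribution of each module on the mixed solutions can be read off the ablation table by differencing the R2 values of successive model variants. The short sketch below does this arithmetic with the table's own numbers; the dictionary layout and the helper `stage_gains` are illustrative choices, not part of the study.

```python
# R2 values copied from the ablation table (mixed-solution columns only).
ablation_r2 = {
    "NaCl+KCl": {"CNN": 0.494, "CNN+LSTM": 0.835, "CNN+LSTM+Attention": 0.925},
    "KCl+MgCl2": {"CNN": 0.513, "CNN+LSTM": 0.921, "CNN+LSTM+Attention": 0.973},
    "MgCl2+NaCl": {"CNN": 0.803, "CNN+LSTM": 0.883, "CNN+LSTM+Attention": 0.969},
}


def stage_gains(scores):
    """Return the R2 gain from adding the LSTM, then from adding Attention."""
    lstm_gain = round(scores["CNN+LSTM"] - scores["CNN"], 3)
    attention_gain = round(scores["CNN+LSTM+Attention"] - scores["CNN+LSTM"], 3)
    return lstm_gain, attention_gain


gains = {name: stage_gains(scores) for name, scores in ablation_r2.items()}
for name, (lstm_gain, attention_gain) in gains.items():
    print(f"{name}: +{lstm_gain:.3f} from LSTM, +{attention_gain:.3f} from Attention")
```

On these numbers the LSTM accounts for the larger R2 gain on NaCl+KCl (0.341 versus 0.090) and KCl+MgCl2 (0.408 versus 0.052), while on MgCl2+NaCl the two additions contribute similar amounts (0.080 and 0.086).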

| Abbreviation | Full name |
|---|---|
| NIRS | Near-Infrared Spectroscopy |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| Attention | Attention Mechanism |
| PLSR | Partial Least Squares Regression |
| SVR | Support Vector Regression |
| RF | Random Forest |
| PCR | Principal Component Regression |
| SG | Savitzky-Golay (a spectral preprocessing method) |
| RNN | Recurrent Neural Network |
| SVM | Support Vector Machine |
| Conv1D | 1D Convolutional Layer |
| R2 | Coefficient of Determination |
| RMSE | Root Mean Square Error |


APA Style

Pei, Z., Zhao, J., Wang, N. (2025). A Study on a Salt Solution Concentration Prediction Method Based on CNN-LSTM-Attention. International Journal of Energy and Environmental Science, 10(5), 120-128. https://doi.org/10.11648/j.ijees.20251005.12

ACS Style

Pei, Z.; Zhao, J.; Wang, N. A Study on a Salt Solution Concentration Prediction Method Based on CNN-LSTM-Attention. Int. J. Energy Environ. Sci. 2025, 10(5), 120-128. doi: 10.11648/j.ijees.20251005.12

@article{10.11648/j.ijees.20251005.12,
  author = {Ziyang Pei and Jianfeng Zhao and Ningfeng Wang},
  title = {A Study on a Salt Solution Concentration Prediction Method Based on CNN-LSTM-Attention},
  journal = {International Journal of Energy and Environmental Science},
  volume = {10},
  number = {5},
  pages = {120-128},
  doi = {10.11648/j.ijees.20251005.12},
  url = {https://doi.org/10.11648/j.ijees.20251005.12},
  eprint = {https://article.sciencepublishinggroup.com/pdf/10.11648.j.ijees.20251005.12},
  year = {2025}
}

TY  - JOUR
T1  - A Study on a Salt Solution Concentration Prediction Method Based on CNN-LSTM-Attention
AU  - Ziyang Pei
AU  - Jianfeng Zhao
AU  - Ningfeng Wang
Y1  - 2025/09/25
PY  - 2025
N1  - https://doi.org/10.11648/j.ijees.20251005.12
DO  - 10.11648/j.ijees.20251005.12
T2  - International Journal of Energy and Environmental Science
JF  - International Journal of Energy and Environmental Science
JO  - International Journal of Energy and Environmental Science
SP  - 120
EP  - 128
PB  - Science Publishing Group
SN  - 2578-9546
UR  - https://doi.org/10.11648/j.ijees.20251005.12
VL  - 10
IS  - 5
ER  -